The Neuro-Acoustics of Focus: How AI Redefines the Boundaries of Silence

Updated on Dec. 20, 2025, 11:41 a.m.

In the architectural landscape of the modern workplace, silence has transitioned from a natural state to a manufactured commodity. As remote work and open-plan offices become the dominant paradigms, the human auditory system is increasingly under siege by a chaotic symphony of background interference. The challenge is no longer just “blocking noise” but intelligently isolating the human voice from the auditory smog. This shift from passive obstruction to intelligent extraction, driven by Neural Network-based Digital Signal Processing, is fundamentally altering how we perceive productivity and collaboration.

The Cognitive Cost of Auditory Chaos

Human hearing is tuned to prioritize sudden changes in sound, an evolutionary trait that aided survival. In a modern office or home environment, this means the brain is constantly scanning and processing non-essential stimuli: the hum of an air conditioner, the clatter of keyboards, or distant domestic conversations. This continuous background processing imposes a significant “cognitive load.” Research on noise and stress suggests that even moderate noise levels may raise stress markers such as cortisol and contribute to decision fatigue, reducing the capacity for deep creative work.

The traditional response to this has been passive noise reduction: thick padding and heavy earcups designed to act as physical barriers. While effective for high-frequency sounds, passive barriers struggle with the low-frequency drones typical of modern infrastructure. This led to the development of Active Noise Cancellation (ANC), which uses destructive interference to “neutralize” noise by playing back an inverted copy of it. However, traditional ANC does not distinguish wanted from unwanted sound. It works best on steady, predictable noise, and it can inadvertently muffle the very human voices we need to hear clearly during critical digital interactions.
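The destructive-interference idea behind ANC can be illustrated with a small sketch. It assumes an idealized single-tone drone and a perfectly amplitude-matched anti-noise signal; the sample rate, the 100 Hz tone, and the function names are illustrative assumptions, not details of any particular headset.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed for illustration)
NOISE_HZ = 100.0      # a low-frequency drone (assumed for illustration)


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def residual_after_anc(phase_error_deg, n=SAMPLE_RATE):
    """Sum a sine-wave noise with an inverted copy that is off by a phase error."""
    err = math.radians(phase_error_deg)
    out = []
    for i in range(n):
        t = i / SAMPLE_RATE
        noise = math.sin(2 * math.pi * NOISE_HZ * t)
        anti_noise = -math.sin(2 * math.pi * NOISE_HZ * t + err)
        out.append(noise + anti_noise)
    return out


def attenuation_db(phase_error_deg):
    """Attenuation of the drone in dB (positive means quieter than the original)."""
    noise_rms = math.sqrt(0.5)  # RMS of a unit-amplitude sine
    residual_rms = rms(residual_after_anc(phase_error_deg))
    if residual_rms == 0:
        return float("inf")  # perfect cancellation
    return 20 * math.log10(noise_rms / residual_rms)


for error in (0, 10, 60, 180):
    print(f"phase error {error:>3} deg -> attenuation {attenuation_db(error):.2f} dB")
```

With no phase error the two signals cancel completely. A 10 degree error leaves roughly 15 dB of attenuation, a 60 degree error gives no benefit at all, and a 180 degree error makes the drone louder. This sensitivity to timing is one reason ANC copes better with steady, predictable noise than with irregular sounds.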

The AI Revolution: From Filtering to Extraction

The real breakthrough in communication clarity hasn’t come from better foam, but from smarter silicon. Artificial Intelligence-based noise cancellation differs from traditional methods by using machine learning models trained on large volumes of recorded audio. These models learn to differentiate between “signal” and “noise”: they recognize the spectral signature of human speech and separate it from the irregular, broadband patterns of sounds such as keyboard clatter or a barking dog.
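One common way to act on a signal-versus-noise decision is to compute a per-frequency gain (a mask) for each short frame of audio and apply it to the frame's spectrum. The sketch below shows only that masking step. A hand-written band rule, `toy_voice_mask`, stands in for the trained model, which is an assumption made purely for illustration: a real system would predict the mask from the audio itself, frame by frame. All names and values here are hypothetical.

```python
import cmath
import math


def dft(frame):
    """Naive discrete Fourier transform (fine for a short demo frame)."""
    n = len(frame)
    return [sum(frame[k] * cmath.exp(-2j * math.pi * b * k / n) for k in range(n))
            for b in range(n)]


def idft(spectrum):
    """Inverse of dft(); returns the real part of each output sample."""
    n = len(spectrum)
    return [(sum(spectrum[b] * cmath.exp(2j * math.pi * b * k / n)
                 for b in range(n)) / n).real
            for k in range(n)]


def apply_mask(frame, mask):
    """Scale each frequency bin by mask[b], with b running from 0 to n // 2.

    The same gain is applied to the mirrored bin so the output stays real.
    """
    n = len(frame)
    spectrum = dft(frame)
    masked = [spectrum[b] * mask[min(b, n - b)] for b in range(n)]
    return idft(masked)


def toy_voice_mask(n, sample_rate, low_hz=200.0, high_hz=3400.0):
    """Stand-in for a learned model: keep bins inside an assumed speech band."""
    bin_hz = sample_rate / n
    return [1.0 if low_hz <= b * bin_hz <= high_hz else 0.0
            for b in range(n // 2 + 1)]


SAMPLE_RATE = 8000  # assumed; gives 100 Hz bins with an 80-sample frame
N = 80
voice = [math.sin(2 * math.pi * 300 * k / SAMPLE_RATE) for k in range(N)]
hum = [0.5 * math.sin(2 * math.pi * 100 * k / SAMPLE_RATE) for k in range(N)]
noisy = [v + h for v, h in zip(voice, hum)]

cleaned = apply_mask(noisy, toy_voice_mask(N, SAMPLE_RATE))
worst_error = max(abs(c - v) for c, v in zip(cleaned, voice))
print(f"largest difference from the clean tone: {worst_error:.2e}")
```

In this toy case the 100 Hz hum falls outside the kept band and is removed, while the 300 Hz tone that plays the role of the voice passes through unchanged. The value of a learned mask is that it can make the same kind of decision when speech and noise overlap in frequency, which a fixed band rule cannot do.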

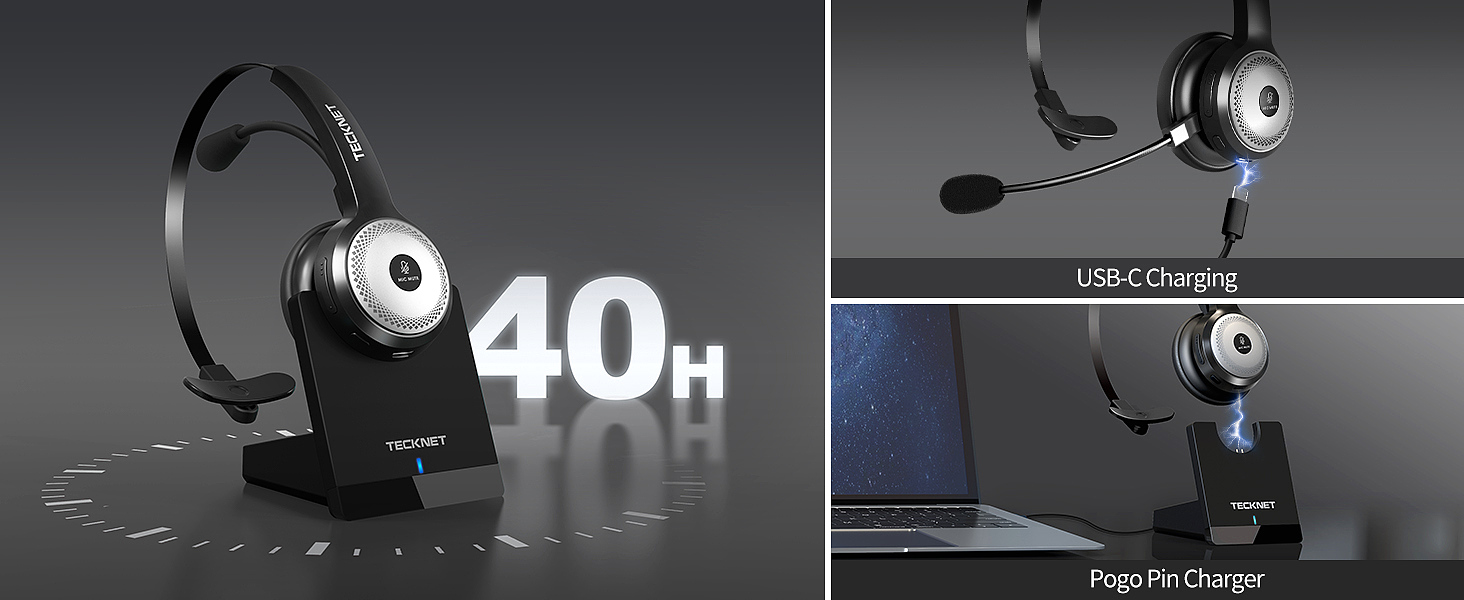

This technology uses Digital Signal Processing (DSP) to analyze incoming sound in real time, typically at tens of thousands of samples per second (16 kHz and 48 kHz are common sampling rates). By identifying the vocal harmonics, the system can dynamically suppress nearly all non-speech components. This is not merely a filter; it is a reconstructive process that aims to give the listener on the other end a clean, isolated vocal track regardless of the sender’s environment. Devices like the TECKNET TK-HS003 Wireless Headset exemplify this paradigm shift, employing specialized AI algorithms with a stated noise suppression of up to 99.8%, effectively creating a “virtual sound booth” around the user.
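A percentage such as 99.8% is easier to compare with other audio specifications once it is converted to decibels. The text does not say whether the figure refers to signal amplitude or to signal power, so the sketch below shows both readings; the function name and its `quantity` parameter are illustrative assumptions.

```python
import math


def suppression_to_db(percent_removed, quantity="amplitude"):
    """Convert 'percent of noise removed' into attenuation in decibels.

    quantity="amplitude" uses 20*log10, quantity="power" uses 10*log10.
    """
    remaining = 1.0 - percent_removed / 100.0
    if not 0.0 < remaining <= 1.0:
        raise ValueError("percent_removed must be in the range [0, 100)")
    if quantity == "amplitude":
        factor = 20.0
    elif quantity == "power":
        factor = 10.0
    else:
        raise ValueError("quantity must be 'amplitude' or 'power'")
    return -factor * math.log10(remaining)


print(f"{suppression_to_db(99.8, 'amplitude'):.1f} dB if 99.8% refers to amplitude")
print(f"{suppression_to_db(99.8, 'power'):.1f} dB if 99.8% refers to power")
```

Read as amplitude, 99.8% corresponds to about 54 dB of attenuation; read as power, about 27 dB. The gap between the two shows why a percentage alone does not fully specify how much quieter the noise becomes.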

Adaptive Communication in Hybrid Ecosystems

As the barriers between professional and domestic spaces dissolve, the technology must adapt to a multi-point connectivity model. The modern knowledge worker often switches between a PC-based video conference and a mobile-based client call within seconds. This necessitates a more robust wireless infrastructure. Bluetooth 5.0 and multi-point pairing technologies have moved from luxury features to foundational necessities for maintaining workflow continuity.

The evolution of these systems also considers the physical placement of hardware. For instance, a rotatable microphone boom lets the user aim the capsule at the mouth, and designs with more than one microphone can apply beamforming, directing the software’s “attention” toward the source of the voice while ignoring peripheral interference. Integrating such technology into lightweight frames ensures that the processing power doesn’t become a physical burden over long workdays. This synergy between software intelligence and mechanical adaptability is the hallmark of the current era of telecommunication gear.
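Beamforming in its simplest form is delay-and-sum: the signals from several microphones are time-aligned for one direction and averaged, so sound from that direction adds up while sound from other directions partly cancels. The sketch below computes the resulting gain for a single tone arriving as a plane wave. The two-microphone layout, the 2 kHz test tone, and the function name are assumptions for illustration and do not describe the microphone configuration of any specific headset.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at room temperature


def array_gain(angle_deg, freq_hz, mic_positions_m, steer_deg=0.0):
    """Relative output level (0 to 1) of a delay-and-sum beamformer.

    angle_deg is the arrival direction of a plane wave and steer_deg is the
    direction the array is aligned for, both measured from straight ahead.
    """
    total = 0j
    for x in mic_positions_m:
        arrival_delay = x * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND
        steering_delay = x * math.sin(math.radians(steer_deg)) / SPEED_OF_SOUND
        total += cmath.exp(-2j * math.pi * freq_hz * (arrival_delay - steering_delay))
    return abs(total) / len(mic_positions_m)


# Two microphones spaced half a wavelength apart at 2 kHz (assumed layout).
MICS = [0.0, 0.08575]
for angle in (0, 30, 90):
    print(f"{angle:>2} deg -> gain {array_gain(angle, 2000.0, MICS):.3f}")
```

With the array steered straight ahead, a 2 kHz tone from the front passes at full level, one from 30 degrees off-axis drops to about 0.71, and one from the side cancels almost completely. Practical systems use adaptive variants of this idea and work across the whole speech band rather than at a single frequency.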

The Future of Synthetic Environments

Looking ahead, the integration of AI in acoustics will likely lead to “context-aware” silence. We are moving toward systems that don’t just block all noise, but selectively let certain sounds through based on situational importance; a parent could hear a specific alert while staying on a professional call, for example. The democratization of high-end DSP, as seen in accessible devices like the TECKNET TK-HS003, is just the first step. As these algorithms become more efficient, we can expect professional clarity to depend less and less on physical location.

The ultimate goal of acoustic engineering is to make technology invisible. When the person on the other end of a 3,000-mile digital bridge hears you as clearly as if you were in the same room, the technology has succeeded. By leveraging AI to conquer the fundamental physics of noise, we are not just making calls better; we are expanding the human capacity for focus and connection in an increasingly loud world.